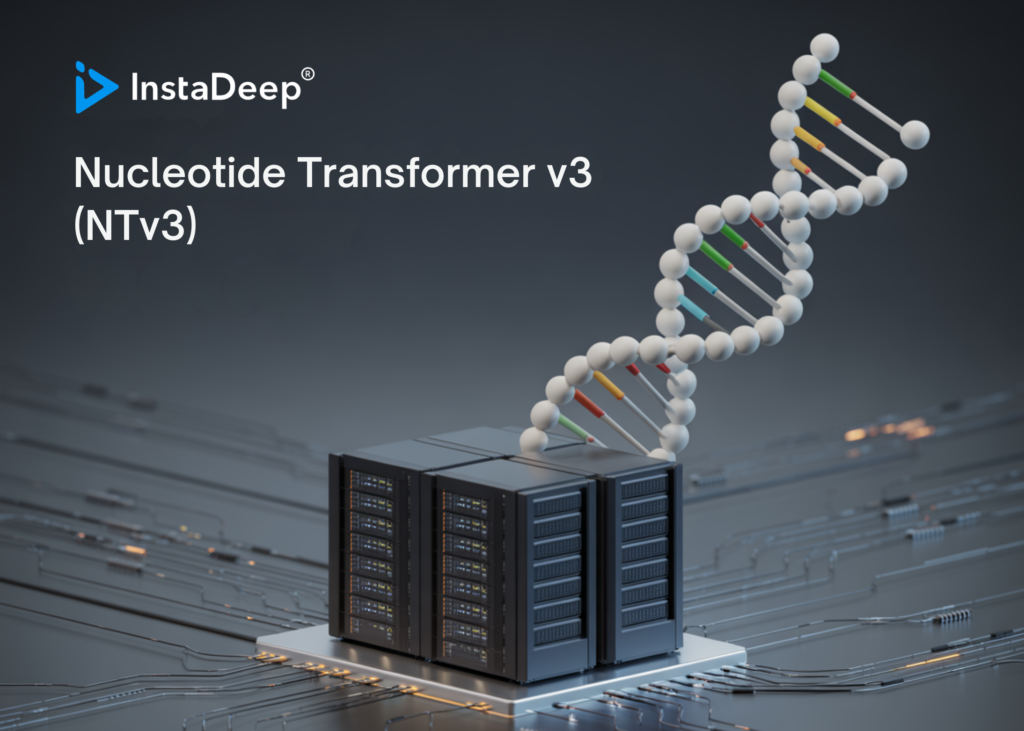

InstaDeep Introduces Nucleotide Transformer v3 (NTv3): A New Multi-Species Genomics Foundation Model, Designed for 1 Mb Context Lengths at Single-Nucleotide Resolution

Genomic prediction and design now require models that connect local motifs with megabase-scale regulatory context and that work across many organisms. Nucleotide Transformer v3, or NTv3, is InstaDeep’s new multi-species genomics foundation model for this setting. It unifies representation learning, functional track and genome annotation prediction, and controllable sequence generation in a single backbone that runs on 1 Mb contexts at single-nucleotide resolution.

Earlier Nucleotide Transformer models already showed that self-supervised pretraining on thousands of genomes yields strong features for molecular phenotype prediction. The original series included models from 50M to 2.5B parameters trained on 3,200 human genomes and 850 additional genomes from diverse species. NTv3 keeps this sequence-only pretraining idea but extends it to longer contexts and adds explicit functional supervision and a generative mode.

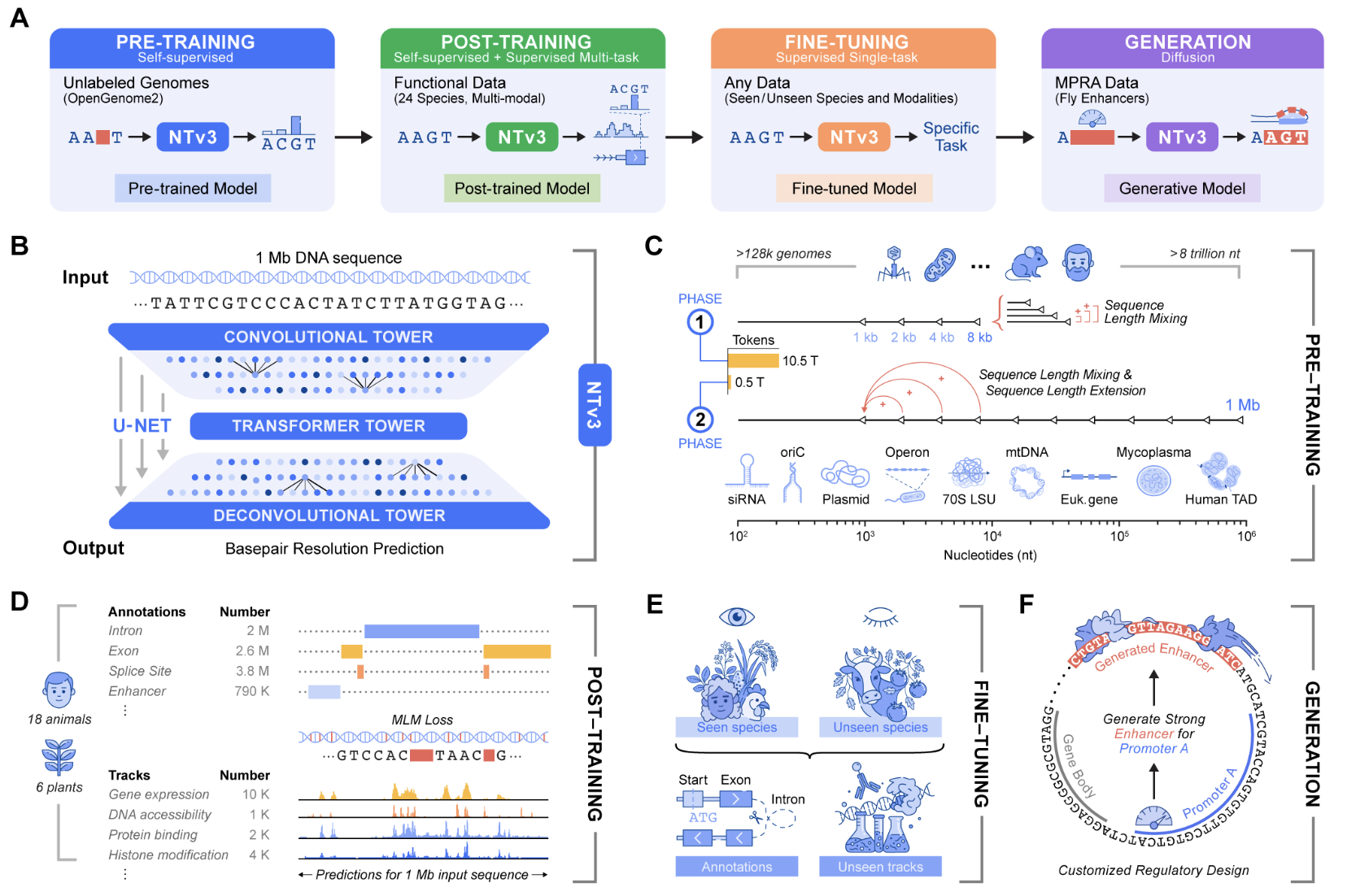

Architecture for 1 Mb genomic windows

NTv3 uses a U-Net style architecture that targets very long genomic windows. A convolutional downsampling tower compresses the input sequence, a transformer stack models long-range dependencies in that compressed space, and a deconvolution tower restores base-level resolution for prediction and generation. Inputs are tokenized at the character level over the five symbols A, T, C, G, and N, plus the six special tokens <unk>, <pad>, <mask>, <cls>, <eos>, and <bos>. Sequence length must be a multiple of 128 tokens, and the reference implementation uses padding to enforce this constraint. All public checkpoints use single-base tokenization with a vocabulary size of 11 tokens.
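The tokenization and padding rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the released tokenizer: the token order and the integer IDs are assumptions, and `encode` and `pad_to_multiple` are hypothetical helper names.

```python
# Illustrative sketch only: the token order and IDs below are assumptions,
# not the layout used by the released NTv3 tokenizer.
SPECIAL_TOKENS = ["<unk>", "<pad>", "<mask>", "<cls>", "<eos>", "<bos>"]
NUCLEOTIDES = ["A", "T", "C", "G", "N"]
VOCAB = {tok: i for i, tok in enumerate(SPECIAL_TOKENS + NUCLEOTIDES)}
assert len(VOCAB) == 11  # 6 special tokens + 5 nucleotide symbols

MULTIPLE = 128  # required sequence-length multiple stated in the article


def encode(sequence: str) -> list[int]:
    """Map each base to one token ID; unrecognized characters become <unk>."""
    unk = VOCAB["<unk>"]
    return [VOCAB.get(base, unk) for base in sequence.upper()]


def pad_to_multiple(ids: list[int], multiple: int = MULTIPLE) -> list[int]:
    """Right-pad with <pad> until the length is a multiple of `multiple`."""
    remainder = len(ids) % multiple
    if remainder == 0:
        return ids
    return ids + [VOCAB["<pad>"]] * (multiple - remainder)


ids = pad_to_multiple(encode("ACGTN" * 30))  # 150 bases in
print(len(ids))  # 256, the next multiple of 128
```

Whether the real implementation pads on the right or the left is not stated in the article, so right-padding here is also an assumption.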

The smallest public model, NTv3 8M pre, has about 7.69M parameters with hidden dimension 256, FFN dimension 1,024, 2 transformer layers, 8 attention heads, and 7 downsample stages. At the high end, NTv3 650M uses hidden dimension 1,536, FFN dimension 6,144, 12 transformer layers, 24 attention heads, and 7 downsample stages, and adds conditioning layers for species-specific prediction heads.
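To make these numbers concrete, the sketch below stores the quoted hyperparameters in a small config object and derives how long the sequence is once it reaches the transformer stack. The `NTv3Config` class is hypothetical, and two assumptions are baked in: each downsample stage halves the sequence length (which would explain the multiple-of-128 constraint, since 2^7 = 128), and 1 Mb means 2^20 = 1,048,576 bases.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NTv3Config:
    """Hyperparameters quoted in the article; the class itself is hypothetical."""

    hidden_dim: int
    ffn_dim: int
    num_layers: int
    num_heads: int
    num_downsample_stages: int

    @property
    def downsample_factor(self) -> int:
        # Assumption: every downsample stage halves the sequence length.
        return 2 ** self.num_downsample_stages

    @property
    def head_dim(self) -> int:
        # Per-head width under standard multi-head attention.
        return self.hidden_dim // self.num_heads

    def compressed_length(self, num_bases: int) -> int:
        """Number of positions seen by the transformer stack."""
        if num_bases % self.downsample_factor != 0:
            raise ValueError("input length must be a multiple of the downsample factor")
        return num_bases // self.downsample_factor


small = NTv3Config(hidden_dim=256, ffn_dim=1024, num_layers=2,
                   num_heads=8, num_downsample_stages=7)
large = NTv3Config(hidden_dim=1536, ffn_dim=6144, num_layers=12,
                   num_heads=24, num_downsample_stages=7)

ONE_MB = 2 ** 20  # assumption: 1 Mb is taken as 1,048,576 bases

print(large.downsample_factor)          # 128
print(large.compressed_length(ONE_MB))  # 8192
print(small.head_dim, large.head_dim)   # 32 64
```

Under these assumptions, attention runs over roughly eight thousand positions rather than a million, which is what makes a 1 Mb window tractable for a transformer.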

Training data

NTv3 is pretrained on 9 trillion base pairs from the OpenGenome2 resource using base-resolution masked language modeling. After this stage, the model is post-trained with a joint objective that combines continued self-supervision with supervised learning on approximately 16,000 functional tracks and annotation labels from 24 animal and plant species.
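The two training signals can be illustrated with a small sketch. It shows base-resolution masking for the masked language modeling stage and a weighted sum for the joint post-training objective. The article gives neither the masking rate nor how the two losses are combined, so the 15% rate, the `supervised_weight` parameter, the `<mask>` ID, and the function names are all assumptions for illustration.

```python
import random

MASK_ID = 2          # hypothetical ID for <mask>
IGNORE_INDEX = -100  # label for positions that do not contribute to the MLM loss


def mask_tokens(ids, mask_rate=0.15, seed=0):
    """Replace a random subset of tokens with <mask>.

    Returns (inputs, labels). Labels hold the original token at masked
    positions and IGNORE_INDEX everywhere else. The 15% rate is an
    assumption, not a value from the NTv3 release.
    """
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in ids:
        if rng.random() < mask_rate:
            inputs.append(MASK_ID)
            labels.append(tok)
        else:
            inputs.append(tok)
            labels.append(IGNORE_INDEX)
    return inputs, labels


def joint_loss(mlm_loss, supervised_loss, supervised_weight=1.0):
    """Weighted sum of the self-supervised and supervised terms (assumed form)."""
    return mlm_loss + supervised_weight * supervised_loss


token_ids = [6, 7, 8, 9] * 50  # 200 toy nucleotide token IDs
inputs, labels = mask_tokens(token_ids)
num_masked = sum(1 for tok in inputs if tok == MASK_ID)
print(f"masked {num_masked} of {len(token_ids)} positions")
```

In post-training, the supervised term would be computed against the functional tracks and annotation labels, while the masked term keeps the sequence-only signal from pretraining in play.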

Performance and NTv3 Benchmark

After post-training, NTv3 achieves state-of-the-art accuracy for functional track prediction and genome annotation across species. It outperforms strong sequence-to-function models and previous genomic foundation models on existing public benchmarks and on the new NTv3 Benchmark, a controlled downstream fine-tuning suite with standardized 32 kb input windows and base-resolution outputs.

The NTv3 Benchmark currently consists of 106 long-range, single-nucleotide, cross-assay, cross-species tasks. Because NTv3 sees thousands of tracks across 24 species during post-training, the model learns a shared regulatory grammar that transfers between organisms and assays and supports coherent long-range genome-to-function inference.

From prediction to controllable sequence generation

Beyond prediction, NTv3 can be fine-tuned into a controllable generative model via masked diffusion language modeling. In this mode, the model receives conditioning signals that encode desired enhancer activity levels and promoter selectivity, and it fills masked spans in the DNA sequence in a way that is consistent with those conditions.
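A rough sketch of how conditional span filling can work is shown below. It uses a generic iterative-unmasking loop of the kind common in masked diffusion samplers: at each step the model scores every masked position, and the most confident predictions are committed. This is not NTv3's actual sampler. `toy_denoiser` is a hypothetical stand-in that returns random bases and ignores the condition, and the `condition` dictionary format is invented for illustration.

```python
import random

BASES = "ACGT"
MASK = "_"  # placeholder character standing in for the <mask> token


def toy_denoiser(sequence, condition, rng):
    """Hypothetical stand-in for the fine-tuned model.

    Returns {position: (base, confidence)} for every masked position.
    A real model would use `condition`; this toy version ignores it.
    """
    return {
        i: (rng.choice(BASES), rng.random())
        for i, ch in enumerate(sequence)
        if ch == MASK
    }


def fill_masked_spans(sequence, condition, num_steps=4, seed=0):
    """Iteratively unmask a sequence, most confident positions first."""
    rng = random.Random(seed)
    seq = list(sequence)
    for step in range(num_steps):
        masked = [i for i, ch in enumerate(seq) if ch == MASK]
        if not masked:
            break
        preds = toy_denoiser(seq, condition, rng)
        # Spread the remaining masked positions evenly over the remaining
        # steps (ceiling division), so the last step fills everything left.
        remaining_steps = num_steps - step
        k = -(-len(masked) // remaining_steps)
        best = sorted(masked, key=lambda i: preds[i][1], reverse=True)[:k]
        for i in best:
            seq[i] = preds[i][0]
    return "".join(seq)


template = "ACGT" + MASK * 12 + "TTGA"  # fixed flanks, masked 12-base span
designed = fill_masked_spans(template, condition={"activity": "high"})
print(designed)
```

The flanking bases stay fixed while only the masked span is rewritten, which mirrors the article's description of filling masked spans under a given condition.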

In experiments described in the launch materials, the team designs 1,000 enhancer sequences with specified activity and promoter specificity and validates them in vitro using STARR-seq assays in collaboration with the Stark Lab. The results show that the generated enhancers recover the intended ordering of activity levels and achieve more than a twofold improvement in promoter specificity over baselines.

Key Takeaways

NTv3 is a long-range, multi-species genomics foundation model: It unifies representation learning, functional track prediction, genome annotation, and controllable sequence generation in a single U-Net style architecture that supports 1 Mb contexts at single-nucleotide resolution across 24 animal and plant species.

The model is trained on 9 trillion base pairs with joint self-supervised and supervised objectives: NTv3 is pretrained on 9 trillion base pairs from OpenGenome2 with base-resolution masked language modeling, then post-trained on approximately 16,000 functional tracks and annotation labels from 24 species using a joint objective that mixes continued self-supervision with supervised learning.

NTv3 achieves state-of-the-art performance on the NTv3 Benchmark: After post-training, NTv3 reaches state-of-the-art accuracy for functional track prediction and genome annotation across species and outperforms previous sequence-to-function models and genomics foundation models on public benchmarks and on the NTv3 Benchmark, which contains 106 standardized long-range downstream tasks with 32 kb inputs and base-resolution outputs.

The same backbone supports controllable enhancer design validated with STARR-seq: NTv3 can be fine-tuned as a controllable generative model using masked diffusion language modeling to design enhancer sequences with specified activity levels and promoter selectivity, and these designs are validated experimentally with STARR-seq assays that confirm the intended activity ordering and improved promoter specificity.

